The Product Engineering Evolution, Part 1: The Implications of Legacy Code

Revenge of the COBOL!

In Ready Player 3, we argued that Product Engineering isn’t a mere shift in methodology but a fundamental rethinking of how we bring products to life. Gone are the days when product management and engineering operated in silos, each defining "value" from different perspectives. Product Engineering breaks down these walls, creating a world where strategy, design, development, and customer insights converge into a single agile workflow–and indeed, sometimes a single person–amplified by the capabilities of new artificial intelligence tools.

Consider a product team at a fast-growing tech startup today. Just a few years ago, their roles would have looked entirely different: a product manager mapping strategy, creating roadmaps, and defining backlogs; an engineering team developing, delivering, and maintaining code; and designers guiding the product teams to deliver a delightful user experience.

Now, with AI tools like frontier large language models (Anthropic’s Claude, OpenAI’s ChatGPT), GitHub Copilot, and Amazon CodeGuru, anyone with vision and an idea can potentially build and deploy software in record time, with no need for formal coding skills or deep development experience. That same visionary can create and execute a comprehensive go-to-market plan, including brand, content, and product marketing; pricing and packaging; and the structure, tools, and team mechanics to establish a high-performing sales organization. In this world, five individuals can deliver the kind of impact that once required a team of fifty.

However, Product Engineering collapses overlapping and once-distinct roles. It redefines the “what,” “why,” “how,” and “who” in product development, raising complex questions about integrating legacy systems into this new approach. How can legacy infrastructure adapt to the flexibility and speed that Product Engineering demands? What happens to code created in collaboration with AI tools when applied to modernize outdated systems?

Let’s consider the impact of Product Engineering on legacy systems so that you, as tech leaders, can develop insights into the challenges ahead and strategies to address them.

Legacy Code Migration: A Race Against Time

The global financial industry runs on legacy code.

When it released Excel 5.0 in 1993, Microsoft introduced Visual Basic for Applications (VBA).

That year, Radiohead and Wu-Tang Clan debuted epoch-defining albums that forever changed alternative and hip-hop. A pre-scandal, pre-cancer (but probably not pre-doping) Lance Armstrong won cycling’s world road racing championship in Oslo, Norway.

Every morning since, financial professionals worldwide have watched Excel spreadsheets loaded with macros crawl to life, running risk models, rebalancing portfolios, and planning the day’s trades. Most of this code is decades old, and no one currently using it knows who developed it or how it works.

Thirty-three years prior, in 1960, the Conference on Data Systems Languages, or CODASYL, released COBOL.

In 1960, Elvis was killing it with It’s Now or Never, Chubby Checker had everyone doing The Twist, and Belgium’s Rik Van Looy traveled through the Iron Curtain to East Germany, where he won the first of two consecutive world road racing championships. Remember when East Germany was behind the Iron Curtain? So do COBOL programmers.

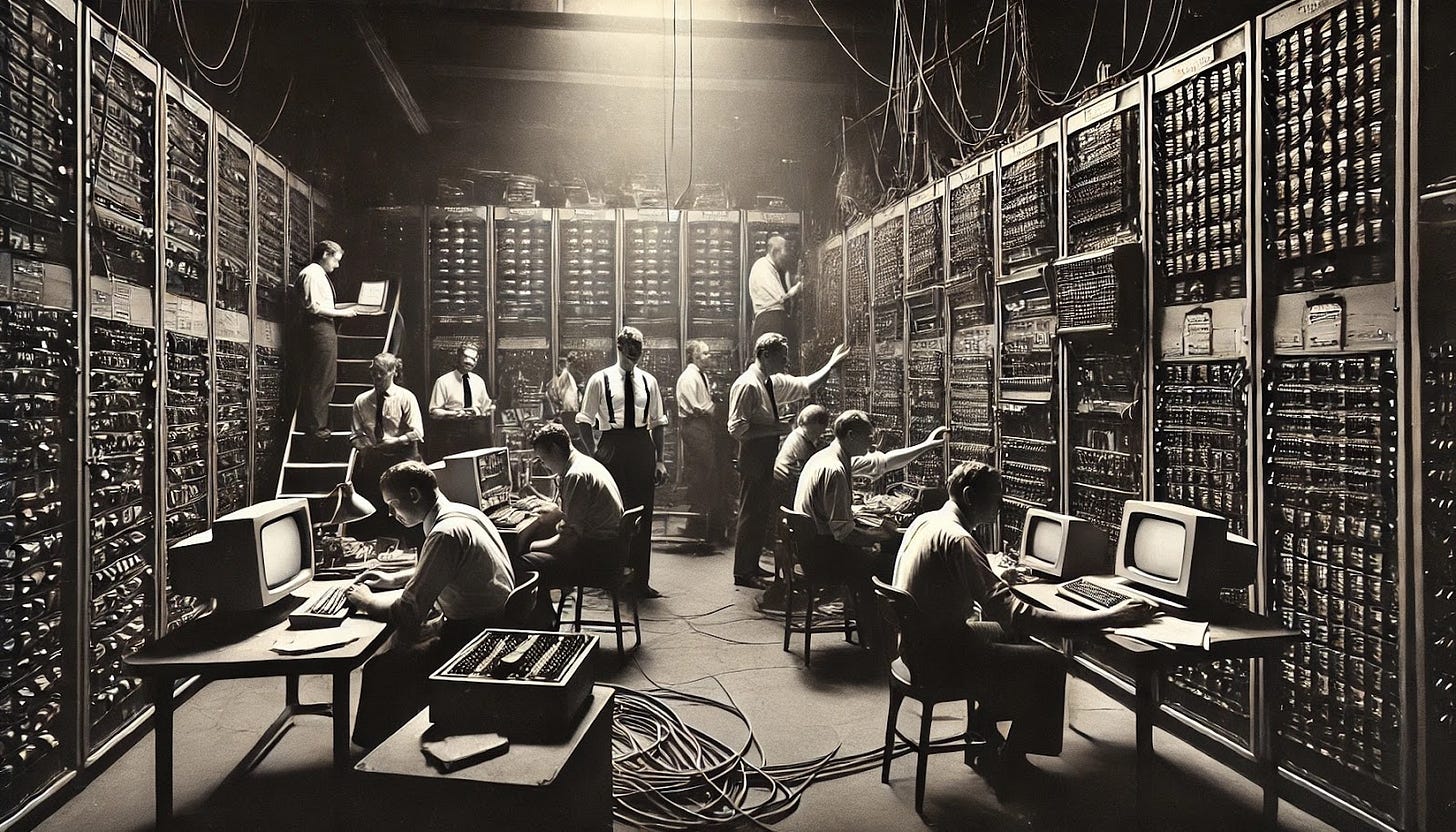

Every day since, giant mainframes running 220 billion(!?) lines of COBOL code lumber through trillions of transactions, including 95% of the card swipes across the globe’s 3.2 million ATMs and 80% of all in-person credit card transactions. Most of this code is so old that companies seek out so-called ‘COBOL Cowboys,’ a shrinking group of octogenarians still familiar with the ins and outs of computer punch cards.

“The entire GDP of the world is in motion in the [banking] network at any moment in time. A bank turns over twice its assets every day, out and in. A clearing bank in, say, New York, it could be more… So a huge amount of money is in motion in the network and in big backend systems that do it. They can’t fail! If they fail, the world ends. The world ends.”

The VBA code that models risks and the COBOL that executes trades just work. These systems are too costly to maintain, too important to fail, and too entrenched to change.

Imagine a legacy payroll processing system used by a large regional bank. It’s been in operation for 40 years, and while it’s stable, its costs continue to climb. The bank has explored cloud migration for years but hasn’t found a solution to justify the risk of interrupting payroll.

Reliance on legacy code isn’t isolated to the financial industry. Companies in healthcare, pharmaceuticals, manufacturing, automotive, and utilities have stuck with legacy code simply because it still works. These corporations feel this technical debt as a massive headache—a pain point that refuses to disappear.

Do we simply lack the imagination to solve legacy code migration? Or do we lack the skills to engineer the change within acceptable risk windows? Perhaps the problem is one of emotions, and we are simply too scared to risk the consequences, even if the likelihood of those consequences is relatively low.

Certainly, one of the issues is feature bloat: we simply build too much software. We are historically bad at pruning features and removing unused functionality after releasing products. Product complexity grows over time, placing demands on teams to over-index on testing and exercise dead code paths based on the fear that anything could break everything.

This phenomenon is particularly pronounced in legacy products where the code is stale, opaque, and misunderstood. After years (or decades!) of cobbling together features, the dependency tree appears unintelligible to even the most talented human developers.
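To make the dead-code problem concrete, here is a minimal sketch in Python of how a team might flag routines that nothing reaches anymore. It assumes you have already extracted a call graph from the legacy code base, which is the hard part in practice. The routine names and the `CALL_GRAPH` data below are invented for illustration and do not come from any real system.

```python
from collections import deque


def reachable(call_graph, entry_points):
    """Return every routine reachable from the given entry points."""
    seen = set(entry_points)
    queue = deque(entry_points)
    while queue:
        current = queue.popleft()
        for callee in call_graph.get(current, ()):
            if callee not in seen:
                seen.add(callee)
                queue.append(callee)
    return seen


def dead_routines(call_graph, entry_points):
    """Return routines present in the graph that no entry point reaches."""
    all_routines = set(call_graph)
    for callees in call_graph.values():
        all_routines.update(callees)
    return sorted(all_routines - reachable(call_graph, entry_points))


# Hypothetical call graph for an invented payroll workbook:
# each key is a routine, each value lists the routines it calls.
CALL_GRAPH = {
    "RunPayroll": ["LoadEmployees", "ComputeTax"],
    "ComputeTax": ["LookupBracket"],
    "LoadEmployees": [],
    "LookupBracket": [],
    "ExportToFax": ["FormatFaxPage"],
    "FormatFaxPage": [],
}

print(dead_routines(CALL_GRAPH, ["RunPayroll"]))
# prints ['ExportToFax', 'FormatFaxPage']
```

A static pass like this only produces candidates for pruning. Legacy systems often invoke routines dynamically (by name, from job schedulers, or from buttons on a worksheet), so anything flagged here still needs a human or an AI collaborator to confirm it is truly unused.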

Now, AI-based code migration promises a path forward. Developers have access to sophisticated AI coding partners that analyze, refactor, optimize, and modernize the code and make it ready for a cloud-native environment–going well beyond the constraints of “lift and shift.” AI models are particularly good at analyzing large, complex code bases and finding patterns or issues that may not be obvious to developers.

AI gives Product Engineers a leg up by surfacing problems they would otherwise miss; after all, you can’t solve problems you don’t know about. In collaboration with AI, developers can build creative solutions to the problems posed by legacy code.

The potential here is huge—but so is the pressure.

Artisanal Migration

We’re at a Y2K-style tipping point.

Consulting giants like Accenture and Tata Consultancy Services (TCS) have launched AI-driven services for migrating legacy code, positioning themselves as leaders in modernization and migration. Accenture offers a range of tools and methods that support rapid re-platforming and migration, enabling companies to unlock the full value of the cloud. TCS, for its part, leverages an extensive IP portfolio, contextual knowledge, and next-gen cloud technology to drive successful migration projects. Yet these approaches often feel more like temporary fixes than actual transformations.

With its Watsonx Code Assistant for Z, IBM takes a similar AI-augmented approach. IBM’s AI aims to modernize COBOL applications by translating them into Java on IBM Z systems. [Note: in 2024, this feels like migrating from legacy to slightly less legacy.] While the tool’s generative AI model has learned from 1.5 trillion tokens and supports 115 languages, its efficacy is still questioned. Once a leader in AI, IBM Watson has struggled to keep pace with the advancements of newer models from Google, OpenAI, and others. These struggles leave the industry skeptical about whether IBM’s approach is competitive enough in the AI arms race to deliver the actual transformation organizations seek.

Global enterprises are tackling legacy code migration by collaborating with universities in offshore locations to develop specialized COBOL programs. In Brazil, the INTERON COBOL Academic Program partners with institutions such as Fatec–Santos in Santos, Universidade São Judas Tadeu in São Paulo, and Feevale University in Novo Hamburgo. These collaborations aim to equip students with practical skills for managing and evolving legacy systems. Additionally, Instituto Federal Farroupilha in Panambi and Universidade Presbiteriana Mackenzie in São Paulo have joined the program to expand COBOL education. While specific enrollment and graduation statistics from these programs are not publicly available, their establishment reflects a strategic effort to address the shortage of COBOL professionals.

In India, institutions like NIIT offer COBOL specialization courses in tech hubs, including Bangalore, Hyderabad, Chennai, and Pune. These programs align with local industry needs, preparing graduates to handle the complexities of legacy systems and meet the global demand for COBOL expertise.

Beyond Brazil and India, the Open Mainframe Project, based in the United States, offers a COBOL Programming Course accessible worldwide, utilizing modern tools like Microsoft Visual Studio Code. Online platforms such as Coursera and IBM Training also provide remote COBOL training, extending access to these skills globally.

At first glance, training young professionals in a technology often considered outdated, like COBOL, might seem to limit their career potential. However, this approach mirrors the origins of offshore and nearshore models: pursuing cost efficiency by engaging developers outside the U.S. who can deliver quality services. These roles have provided significant upward mobility and economic growth for communities in Latin America, India, and Eastern Europe despite being driven by goals of reducing costs and boosting profit margins.

The financial stakes are massive. Picture an organization that is the first to automate and modernize a legacy system. The impact on speed, product innovation, and cost reduction could tip market share in its favor. Entire industries could see the competitive landscape shift with the tiniest of technical advantages. A payroll provider, for example, could seize more of the market simply by reducing fees slightly—a move that’s only possible with lower operating costs derived from an optimized codebase.

Where this leaves us

Like AI, Product Engineering is emergent. Product leaders and teams are experimenting with this approach, picking up tools that add new skills and automate tasks. Most product teams sit on piles of legacy code, and the innovative ones among them apply emergent technologies, new skills, and organizing principles to get out from under the burden of the past.

The conversation about AI has turned binary. Naysayers insist AI (and especially LLMs) doesn’t do reasoning. Optimists predict AGI will arrive over the next twelve months and perform any task humans can. The more useful question is whether AI can help product teams solve problems like legacy code migration. The debate is best resolved by taking action, running experiments, and proving (or disproving) the hypotheses about the capabilities of AI collaborators.

Do the frontier models ‘understand’ the problem that financial companies face because they rely on legacy code? You tell us.

Claude, tell us a story.

Legacy Code Warriors: A Day at Sufficient Capital

Sarah Chen rubs her temples as her Excel worksheet crawls to life at 6:45 AM. Her dual monitors flicker with dozens of spreadsheets, each loaded with VBA macros written years before she joined Sufficient Capital. Some of the code comments date back to 2003, artifacts from the days when George W. Bush was still in his first term.

"Come on, come on," she mutters, watching the familiar "Not Responding" message flash across her screen. The morning's portfolio rebalancing calculations need to be done before the market opens, and her Excel workbook is choking on twenty years of accumulated macros and circular references.

Finally, the numbers populate. Sarah quickly scans the positions. Her team needs to execute several large trades before the morning volatility kicks in. She clicks a button labeled "SEND TO TRADING" – an innocent-looking cell that triggers a cascade of legacy technology:

First, her VBA script exports the data to a text file format specified in 1998. This file is picked up by a middleware application that converts it to COBOL-compatible punch card format. The data then flows through seven different mainframe systems, each one a critical link in the transaction chain. The oldest mainframe, affectionately nicknamed "Old Bessie" by the IT department, has been running continuously since the Reagan administration.

Sarah's phone rings. It's Dave from IT.

"Hey Sarah, seeing some lag in the trading pipeline this morning. Old Bessie's having one of her moments. We've got operators working on it."

Sarah glances at her watch. 7:23 AM. The market opens in just over an hour.

"Dave, I've got $2.8 billion in trades that need to clear. What's the backup plan?"

Dave's laugh is tired. "Same as always. We'll reboot the auxiliary systems and pray. We've been trying to get approval to upgrade these systems for five years, but you know how it is. Nobody wants to touch working code, even if it's held together with digital duct tape."

Sarah opens another spreadsheet, this one containing fallback procedures for when the mainframe acts up. The document is so old that its creation date shows as "1/1/1970" – the Unix epoch time start date. Like much of Wall Street's infrastructure, it's a digital fossil that somehow remains crucial to the modern financial world.

At 8:15 AM, Dave calls back. Old Bessie is humming again. Sarah's trades begin flowing through, each one passing through a technological time machine: from her modern Bloomberg Terminal, through Excel VBA, through conversion layers, and finally into mainframe systems that were cutting-edge when "Miami Vice" was still on TV.

By 9:30 AM, as the opening bell rings at the NYSE, Sarah's portfolio rebalancing is complete. She takes a sip of now-cold coffee and opens her calendar. There's a meeting at 10: "System Modernization Planning - Phase 1 (Meeting 47)." She's been attending these meetings for three years. They always end the same way: with everyone agreeing that they need to upgrade, followed by someone calculating the cost and risk of replacing systems that process trillions of dollars in transactions.

Sarah minimizes her window of blinking Excel sheets. Behind it is another spreadsheet, one she's been quietly working on: "Resume_2024_Final_v3.docx." Maybe the hedge fund down the street has newer technology. But deep down, she knows they're probably running the same ancient systems, different only in their nicknames for their own Old Bessies.

The financial world turns on, digits flowing through decades-old conduits, empires built on COBOL and VBA, too big to fail and too entrenched to change.
