Enter Player 4: ‘agentic’ software and a new product reality

Back after a little hiatus.

It’s been a hectic start to the year. January was chock-full of planning, days spent in conference rooms brainstorming, scribbling on whiteboards, and hacking through the proverbial underbrush to find the most straightforward path to a successful year.

One theme appeared consistently regardless of company size, industry, or product focus: AI is changing the world under our feet. You must do A LOT of research daily just to keep up with the furious pace of change. Much of the innovation is, at face value, hard to make sense of, let alone put to use. Some of the most important questions facing any product organization are whether it’s even possible to match the AI industry’s rate of change and how to build an AI product strategy amid the chaos.

In the article that kicked off this series, we examined AI’s effects on product organizations. Organizational structures are flattening, traditionally separate roles are merging, and the kinds of software we build are changing. These changes are happening faster than expected, driven by an avalanche of AI innovation and tools that have eclipsed anything we’ve seen.

The AIs we used in 2023 and 2024 were like level 1 non-player characters, running vulnerable across the tundra with no equipment. 2025’s AIs have memory and tools, and we are about to put them to work.

Enter Player 4

Humans without tools are stargazers. Humans with tools are astronomers.

Let’s apply this no tools vs. tools principle to AI.

In 2023 and 2024, it was possible to be guilty of something akin to AI-washing. Depending on your definition of the phenomenon, adding AI to your product amounted to telling the world you’d incorporated an AI model that could explain what was happening in your product when, in fact, you already had dashboards or alerts that did exactly that.

Some companies overhyped their AI’s capabilities. Most companies, though, simply woke up to the fact that generative AI was a thing and didn’t know what to do with it. Because the models lacked the ability to remember or use tools, the experiments using most models gave underwhelming results.

Foundation models provide, well, foundations. None of the labs has the domain expertise to prescribe how to use their models, leaving the rest of us to figure it out. Combine that with the fact that 2023’s and early 2024’s LLMs didn’t have tools at their disposal, and you were left with this:

AI Lab: This model can perfectly recognize all the stars in the night sky!

You: Cool, but I’ve already got a person with a space telescope.

Gradually, throughout 2024, genuinely useful products started appearing: truly AI-native things that solved specific and interesting problems. Generative AI is pretty good at math, science, and coding, and some of the first tools that arrived on the market helped non-coders develop software and made experienced engineers fantastically productive. Standalone coding assistants like Cursor, Replit, Windsurf, Bolt, and others grew like kudzu, while major platform providers like GitHub quickly added (slightly less capable) copilots.

Models advanced slightly, and suddenly, useful tools were everywhere, extracting action items from Zoom meetings, building sophisticated marketing plans, writing all your company’s copy and social media posts using a consistent voice, and making web design available to anyone. [The Neuron curates this nifty list of tools.]

Meanwhile, foundation models developed at a furious pace.

In June 2024, Anthropic’s Claude 3.5 Sonnet raised the bar on most LLM benchmarks and made coding accessible to a non-technical audience. Product managers suddenly had a tool for quickly building interesting feature prototypes, with Claude often writing the code completely unprompted. Plugged into a coding assistant like Cursor, Claude built even richer and more functional prototypes that you could run locally on a laptop or on a cloud platform.

In September, OpenAI released the first of a series of reasoning models, starting with o1. In October, Anthropic countered with computer use for Claude 3.5 Sonnet, arguably the first ‘agentic’ model (if you simplify the ‘agentic’ definition to mean a generative AI model–reasoning or otherwise–with the ability to use tools like your browser).

At the end of 2024, things changed dramatically.

In December, Google released Gemini 2.0. OpenAI made o1 multimodal and, just fifteen days later, announced an even more advanced model, o3.

In January 2025, OpenAI gave us Deep Research and Operator (their answer to Anthropic’s computer use); DeepSeek blew the doors off the technology market with a distilled model developed on the cheap that is open-weight and open-source. A few days later, Stanford researchers released a reasoning model called s1 that ‘thought’ longer and made DeepSeek look expensive, considering they trained it for only $30.

Less than a month later, Gemini now has memory and can recall all your past chats. GPT-5 is imminent, as is Claude 4 Sonnet.

If you feel like you're trying to match a marathon world record pace on the notorious ‘tumbleator,’ well, compared to the pace of AI innovation, running 200 meters every 34 seconds should feel like a relief.

Intelligence, measured

Models are getting more intelligent and sophisticated, faster, and cheaper by the day.

The GAIA benchmark measures AI models on their ability to complete 466 complex, multi-step, real-world tasks. Humans score 92% against the benchmark. Last July, Claude 3.5 Sonnet led the pack at 22%. Today, OpenAI Deep Research leads with 67%.

Designed to be an even more difficult and representative test of human intelligence, Humanity’s Last Exam scores models on their ability to solve complex, closed-ended questions written mostly by PhD-level contributors. When the paper was submitted three weeks ago, most models scored in the single digits. This week, OpenAI Deep Research scored 26%, making the designers’ prediction that some models might exceed 50% accuracy by the end of 2025 seem, um, a tad conservative.

[Note: most models have already surpassed humans on the specialty benchmarks measuring math, science, and coding.]

Meanwhile, the industry is pouring money into research, development, and infrastructure, with Amazon, Google, Meta, and Microsoft planning to spend $325B on AI technologies and data center expansion in 2025. This figure doesn’t even include the $100B from Stargate (who names these things?) and the $40B from OpenAI and SoftBank. xAI has already built a 200,000-GPU cluster. And AI startups raised $110B in 2024, up 62% from the previous year, bringing the five-year total to $353B.

The purpose of all this investment–especially building multi-gigawatt data centers running training clusters of up to 1 million GPUs–is to create models that will make the current generation seem childlike. It’s hard to fathom what the models trained on these giant clusters will be capable of, considering the exponential pace of growth over the last 18 months. 2025 is unlikely to present many opportunities to pause and catch your breath.

People who claim that development is slowing, models aren’t reasoning, and we’ve hit AI’s scaling walls simply aren’t paying attention.

[Note: most of the preceding research rabbit hole began when I stumbled across this podcast featuring Dylan Patel from SemiAnalysis and Nathan Lambert from Ai2. Warning: this thing is over 5 hours long.]

The Nadella Effect

Satya Nadella crashed the interwebs in December 2024 when he announced that SaaS was dead. At least, that’s how the clickbait headline writers and podcasters interpreted what he said. What he actually said is that the way we architect, build, and deliver software is fundamentally changing. Just as we once innovated a cloud-native app stack, we now find ourselves innovating the AI app stack. What is different this time is that decades of innovation have been compressed into just a few short years.

He described what he calls model-forward SaaS, his term for agentic systems. Agentic systems will present an entirely new user experience and novel ways of interacting with data, using intelligent systems that are much more capable than the passive application logic that preceded them.

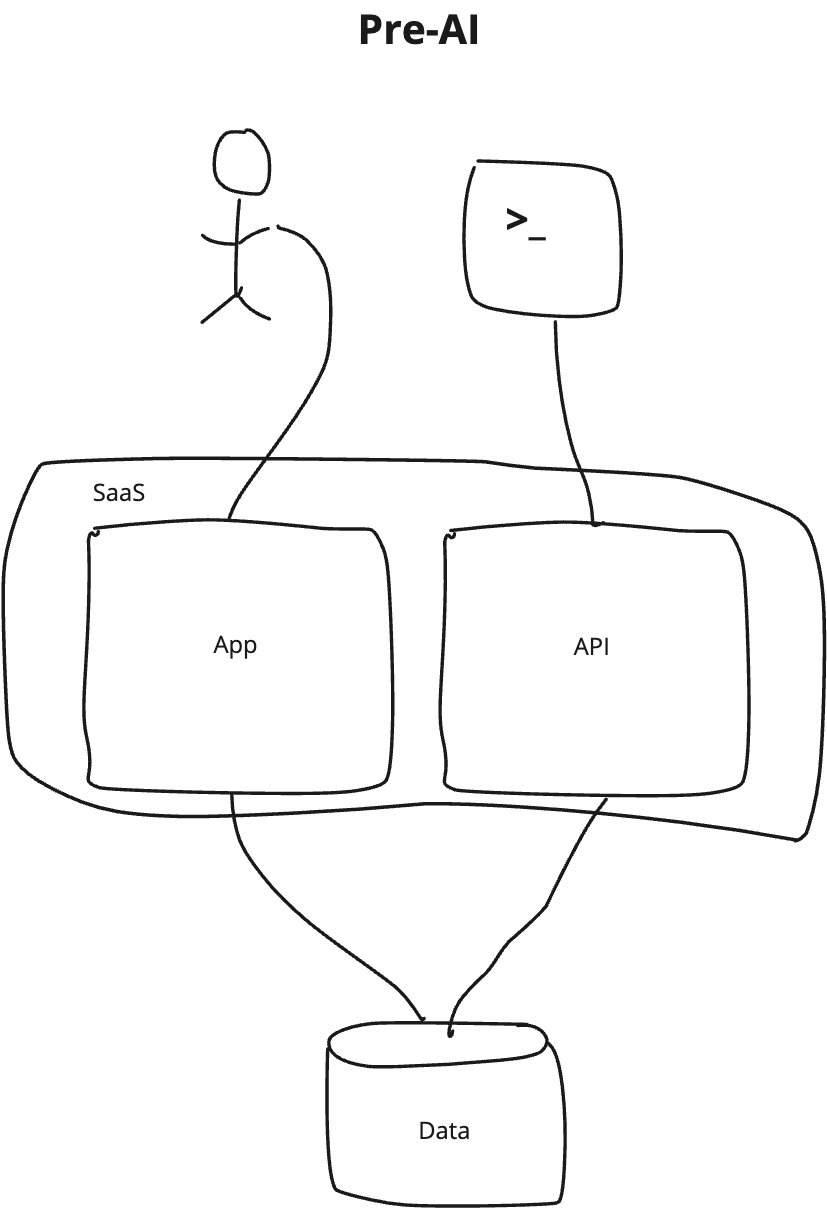

Today, we interact with data through apps, using their UIs and APIs.

Making an app fully capable takes a long time (I’m looking at you, MVPs). You have to decide which features are important, how to build them, and validate with users what good looks like. To solve this problem, we devised Product Management, and product requirements documents, and Agile software development methodologies, and backlogs of ideas about all the things we will probably never build.

Once we’ve delivered our apps into a production environment, we aren’t terribly good at giving users and systems timely and secure access. This is why we have an identity and access industry in addition to a SaaS app industry.

The functionality of these apps is static, the tasks you use them for are repetitive, and the data is fixed in shape (if not size). The questions we can ask of the data are constrained to the app’s already implemented logic. Whenever we want to ask a new question, we request a new feature. This process is maddeningly slow for the users of a product built by even the most productive and efficient product organizations. The greater consequence is that how we use data can only advance as fast as we can update an app’s code across one or more tiers. Apps that once made us hyper-productive now hold us back.

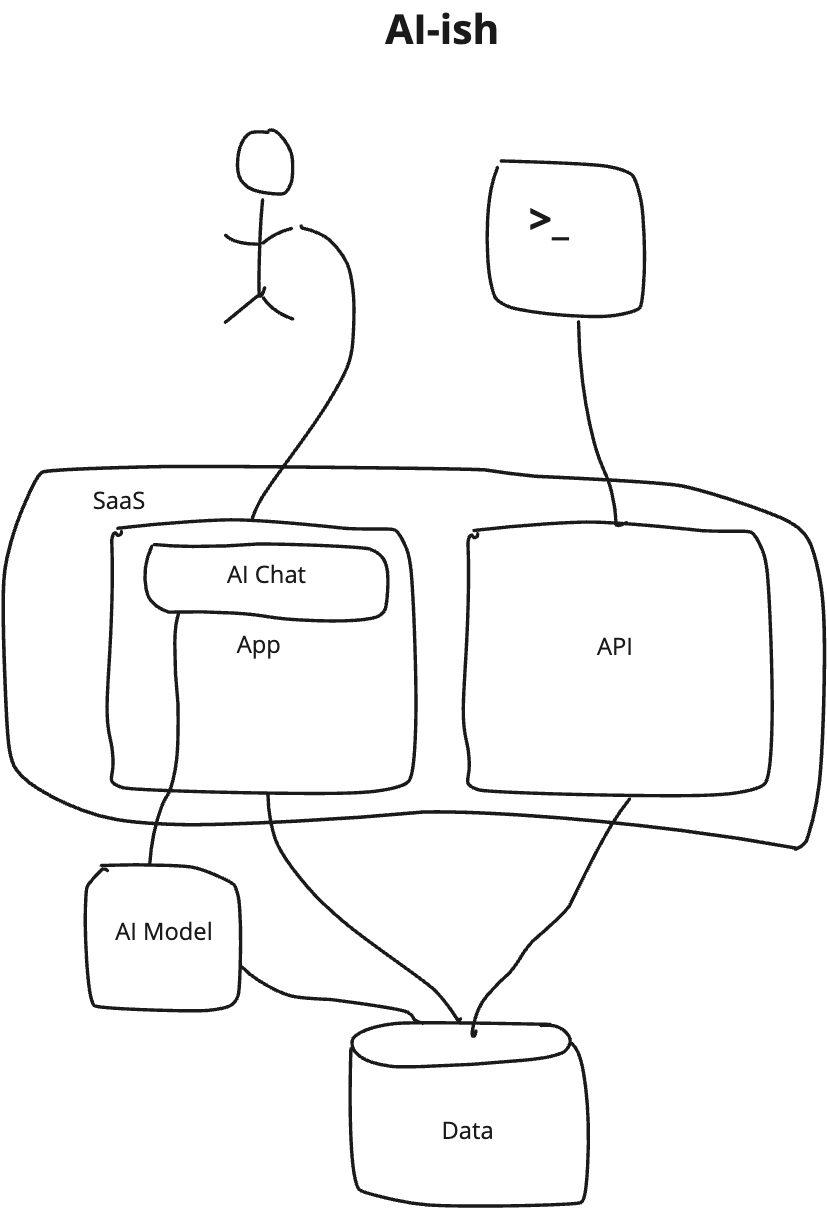

Over the last two years, companies have added AI chat interfaces to their existing products, with the effect of producing something AI-ish.

The app’s logic and underlying data constrain the questions you can ask through a natural language processing layer. The change to the user experience can be slick (asking plain-language questions instead of clicking through menus, hitting buttons, and filling in search boxes), but the value delivered to the user isn’t fundamentally different. To make the AI add-on usable, the app’s developers put guardrails on it so that your questions cannot stray outside the functional boundaries of the product. This phenomenon is the likely culprit behind so many underwhelming impressions of AI’s capabilities. Given the feats we’ve watched models perform, the limitations clearly lie with the app and its underlying data.

Nadella’s point (and others make a similar case) is that the AI app stack changes the user experience, redefines the boundaries of apps, and reorients how we define, catalog, and use data.

In this agentic model, the user doesn’t interact individually with hundreds of different apps. Instead, she will interact with an AI agent aware of hundreds, thousands, or millions of data sources and information repositories. You ask your agent a question, and it helps you get the answer.

You can do this because the models have advanced in three important ways: they have memory, they have access to tools, and we can entitle them to do things on our behalf.
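To make those three capabilities a little more concrete, here is a minimal Python sketch under loudly stated assumptions: the tools (`query_sales`, `send_email`), the sales figures, and the keyword-based planner are all hypothetical stand-ins, and in a real agentic system a model, not a keyword match, would decide which tools to call. Memory is modeled as a running log of steps, tools as plain callables, and entitlements as a set of tool names the user has authorized.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set, Tuple

# Hypothetical tools standing in for the apps, APIs, and data sources an agent might reach.
def query_sales(quarter: str) -> str:
    data = {"Q1": 120, "Q2": 135}  # illustrative numbers only, not real data
    return f"{quarter} sales: ${data.get(quarter, 0)}K"

def send_email(to: str) -> str:
    return f"email queued for {to}"

@dataclass
class Agent:
    tools: Dict[str, Callable[[str], str]]   # tools: what the agent *can* call
    entitlements: Set[str]                   # entitlements: what the user *lets* it call
    memory: List[str] = field(default_factory=list)  # memory: log of every step taken

    def plan(self, request: str) -> List[Tuple[str, str]]:
        # Hypothetical stub planner; a real agent would ask an LLM to choose tools and arguments.
        steps: List[Tuple[str, str]] = []
        if "sales" in request.lower():
            steps += [("query_sales", "Q1"), ("query_sales", "Q2")]
        if "board" in request.lower():
            steps.append(("send_email", "board@example.com"))
        return steps

    def act(self, tool_name: str, arg: str) -> str:
        # Entitlement check: refuse any tool the user has not authorized.
        if tool_name not in self.entitlements:
            result = f"denied: not entitled to use {tool_name}"
        elif tool_name not in self.tools:
            result = f"error: unknown tool {tool_name}"
        else:
            result = self.tools[tool_name](arg)
        # Record the step so later requests can build on earlier results.
        self.memory.append(f"{tool_name}({arg}) -> {result}")
        return result

    def handle(self, request: str) -> List[str]:
        return [self.act(tool, arg) for tool, arg in self.plan(request)]

# Usage: the user entitles the agent to read sales data but not to send email.
agent = Agent(
    tools={"query_sales": query_sales, "send_email": send_email},
    entitlements={"query_sales"},
)
results = agent.handle("Summarize this year's sales for the board")
print(results)
```

Denying `send_email` in this toy run illustrates the design choice behind entitlements: the agent can gather whatever it has been authorized to read, but it cannot act on your behalf beyond what you have explicitly granted.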

Imagine you are the CEO and want to tell your board of directors about your company’s sales so far this year and the forecast for the rest of the year. You also want to predict how the market will evolve and outline a strategy for segmenting and attacking it under those new conditions. Attacking these new market segments requires new products and features, and you want to quantify the company’s opportunity relative to the costs it will take to design, develop, deliver, market, and sell these new products.

Today, you’d ask your leadership team to assemble the information and provide it to you in a dashboard or (more likely) a PowerPoint presentation. Behind the scenes, your employees log into apps, click and type, search and sort, download, and format until the answer reaches you in concise form.

With an AI app, the interface is conversational: you ask your agent questions by text or voice. It works out how to access the data, manipulates it for you, and fills in gaps in information that you didn’t think to ask for. Your agent might ask other agents for information they have and ask the AI app’s agent to query other data sources, perhaps using an agentic API when legacy REST APIs aren’t available.

The agent presents its answers back to you, and you ask it more interesting questions. When you feel your update is ready, the agent generates a video summary and sends it to the board. Your board members ask their agents to interpret, summarize, and prepare follow-up questions.

The above is just one example among dozens of ways in which agentic systems will change how we live, play, and work.¹

For early clues about what this might look like, check out Deep Research and Operator by OpenAI.

I’ve heard some people describe these eventualities as speculative, but that simply isn’t true. These tools are available and at our disposal today.

A month after describing model-forward SaaS and how it enables agentic systems, Nadella reorganized large chunks of Microsoft around this new AI app stack paradigm, with the goal of making cloud infrastructure Microsoft’s largest business. Interestingly, he also redefined what the cloud infrastructure business sells, saying it was moving away from selling web application servers (the backbone of the SaaS industry) to selling AI application servers.

Once people start getting paid to change something, it’s going to change.

So, what should product teams do with all this?

Think about where your business and products are today, then work backward from the most fantastic outcomes you can imagine. [Hint: Use AI for this, especially advanced reasoning models like o1 or o3 with Deep Research.]

What would your product strategy be to arrive at one of these theoretical outcomes? Do you have the skills, tools, and infrastructure to execute your strategy? How will you change the way you design, develop, and deliver your products? How do you deliver value to your customers with existing products while you effect the changes to transition to an AI app stack?

Product teams should immediately take several actions:

Identify the tools that will enhance the productivity and efficiency of their product organization.

Give someone the full-time job of experimenting with how to add AI to a product in a way that delivers real value to customers.

Form a small team to examine the implications of the AI app stack for your products and develop a plan for dealing with them. Give yourselves ninety days.

Earlier in the series, we talked about building Learning Engines to turn product managers into full-stack engineers and developers into go-to-market strategists. In the short time since making those recommendations, the tools at your disposal have become even more powerful. Your likelihood of success with these experiments has increased exponentially.

Have you taken advantage of that?

Imagine AI agents acting on behalf of users:

Finance: Proactively rebalancing investment portfolios, optimizing returns based on your objectives, identifying tax-loss harvesting opportunities and deductions, and generating personalized financial reports.

Health: Managing wellness plans by orchestrating your diet, scheduling checkups and doctor’s appointments (complete with pre-filled sign-in sheets and insurance information), tracking fitness goals, and reminding you to take medication, all tailored to your health profile.

Small Business Management: Handling invoices, accounts payable and receivable, travel expenses, SBA loan applications, accounting, payroll, benefits management, hiring, onboarding, and training. AI agents can also schedule meetings during your preferred time slots, freeing your schedule for deep work and strategic decision-making.

Running the Household: Ordering groceries, birthday reminders with gift ideas, ensuring your EV is charged (or your car fueled), scheduling routine maintenance, minimizing insurance premiums, managing utility payments, optimizing energy bills, scheduling vacuum cleaning that avoids disrupting sleep or daily routines, and preempting household crises like “flat tire mornings.”

Family Schedule: Coordinating family schedules with precision to ensure soccer practices, piano lessons, tutoring sessions, haircuts, walking the dog, pet grooming appointments, and social events don’t overlap while managing Uber rides and nanny schedules.

Vacation Planning: Creating optimal itineraries and booking airfare using rewards miles when possible, reserving an Airbnb with an in-house cook already scheduled, arranging car rentals or transportation, organizing pet boarding, and planning outings with reservations for attractions and restaurants.
